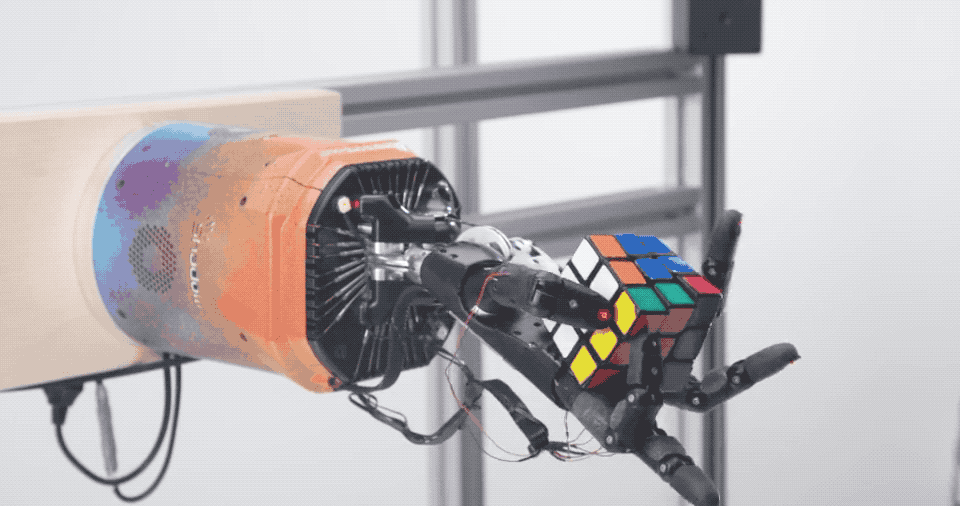

The internet is brimming with clips of robots doing parkour, solving Rubik's cubes, and engaging in general tomfoolery. It might look like they're ready to take over the world, but in reality, robots are big piles of hardware that can't do much unless we spell things out for them.

OpenAI's robot Dactyl allegedly solved a Rubik's cube in 30 seconds, but I think Sam just sped the video up

Robots don't think like humans; they follow instructions. So to get them to do anything meaningful, we have to break down the thought process behind problem solving into step-by-step instructions, or in more technical terms: 'algorithmize the task'. That's because even something as mundane as picking up a glass of water involves dozens of calculations, like grip force, balance, and the motion of the liquid. Your brain handles all of this instinctively thanks to years of experience, but robots don't have intuition.

And no, slapping a GPU on your Roomba and plugging it into ChatGPT won't magically make it smart. Generative AI is great at spotting patterns in large datasets, but in robotics we're mostly dealing with real-time sensor readings. For that, a much simpler approach does the trick.

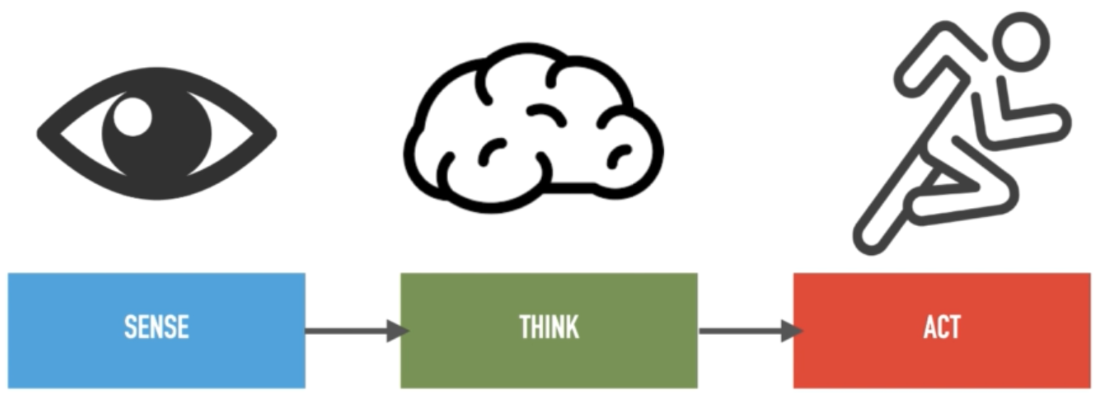

Most robots follow a simple pattern to interact with the world. This pattern, called the sense-think-act loop, is what allows robots to function in dynamic environments. Here's how it works:

Sense: Sensors, or devices that measure something, gather information from the environment

Think: With that information, the robot calculates what to do next

Act: The robot does something in response (usually by moving something like an actuator)

This loop repeats continuously, allowing robots to react and adapt to their surroundings in real time.
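As a rough sketch, the loop above can be written in a few lines of Python. Everything here is a hypothetical stand-in: `sense_think_act`, the canned `fake_readings`, and the arbitrary 10 cm cutoff are assumptions for illustration, not any real robot's API.

```python
from typing import Callable, Iterator, List


def sense_think_act(
    sense: Callable[[], float],
    think: Callable[[float], str],
    act: Callable[[str], None],
    cycles: int,
) -> None:
    """Run the loop a fixed number of times.

    A real robot would keep looping until it is switched off; the cycle
    count just keeps this sketch finite.
    """
    for _ in range(cycles):
        reading = sense()         # Sense: read a value from the environment
        command = think(reading)  # Think: decide what to do with it
        act(command)              # Act: carry out the decision


# Hypothetical stand-ins for real hardware: canned distance readings (in cm)
# instead of a sensor, and a list that records commands instead of motors.
fake_readings: Iterator[float] = iter([50.0, 30.0, 8.0])
log: List[str] = []

sense_think_act(
    sense=lambda: next(fake_readings),
    think=lambda distance_cm: "forward" if distance_cm > 10 else "stop",
    act=log.append,
    cycles=3,
)
print(log)  # ['forward', 'forward', 'stop']
```

Passing the three steps in as functions is just one way to lay it out; it keeps the loop itself identical no matter which sensor or actuator gets plugged in.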

This process may sound familiar because it's actually how humans interact with the world. Our brains process sensory input (like sight, sound, and touch), then make decisions and send signals to our muscles to move. If you touch a hot stove, for instance, the nerves in your skin immediately send a pain signal to your brain, which processes it as "SOMETHING REALLY HOT AHHH" and instructs your hand to pull away. Robots kinda do the same thing, just with a different kind of brain and muscles.

Let's take a robot vacuum for example. It uses something called an infrared (IR) sensor to detect nearby objects. An IR sensor shoots a beam of infrared light forward and measures the time it takes for that light to travel back. From that time and the strength of the returning light, the distance to the nearest object can be calculated. These sensors are really common in robotics since they're a simple and cheap way to measure distance accurately on the fly.

If nothing's within 10 centimeters, the robot sends a signal to its motorized wheels to move forward. If it senses something, it stops, turns 90°, and continues. And with just that logic, it will eventually clean your whole floor (assuming there aren't any stairs; you might need AI for that). The same basic process applies to any robot, whether it's a humanoid navigating obstacles or a Roomba cleaning your floor, just with way more sensors, computers, and actuators.
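Here's a minimal sketch of that vacuum logic in Python. It follows the time-of-flight description above in simplified form (it uses only the round-trip time and ignores signal strength), and the names `distance_from_round_trip` and `vacuum_step`, the turn direction, and the sample readings are all made up for illustration.

```python
from typing import List, Tuple

SPEED_OF_LIGHT_CM_PER_S = 2.998e10  # approximate speed of light, in cm/s
OBSTACLE_THRESHOLD_CM = 10.0        # the "within 10 centimeters" rule


def distance_from_round_trip(seconds: float) -> float:
    """Convert a round-trip travel time into a one-way distance in cm.

    The light travels out and back, so the distance is half the total path.
    """
    return SPEED_OF_LIGHT_CM_PER_S * seconds / 2


def vacuum_step(heading_deg: int, distance_cm: float) -> Tuple[str, int]:
    """Decide one action and return (action, new heading in degrees)."""
    if distance_cm > OBSTACLE_THRESHOLD_CM:
        return "forward", heading_deg       # path is clear: keep going
    return "turn", (heading_deg + 90) % 360  # obstacle: stop and turn 90 degrees


# Hypothetical sensor readings in cm; the third one is inside the threshold.
heading = 0
actions: List[Tuple[str, int]] = []
for reading_cm in [40.0, 25.0, 9.0, 35.0]:
    action, heading = vacuum_step(heading, reading_cm)
    actions.append((action, heading))

print(actions)
# [('forward', 0), ('forward', 0), ('turn', 90), ('forward', 90)]
```

A real vacuum would read the sensor and drive the wheels instead of walking through a list, but the decision itself really is about this small.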

A Roomba vacuuming a room using a unique but adorable puppy sensor

So next time you see a robot doing something cool online, don't credit the robot. Tip your hat to the grad students who translated something impossibly human into motion with a $30 budget.